Ethical Moderation Series Part 2:

How Ethical Are Your Content Moderation Practices?

14 Questions To Find Out

This series addresses content moderation from a user-generated content perspective, where “moderators” are social media or community managers working for commercial entities. While we reference research from studies about moderators hired by social media platforms, we do not address the many issues those moderators face here. If you’re interested in learning more about social media platform moderators, we recommend starting with this Financial Times article from May 2023.

In Part 1 of our 3-part Ethical Moderation Series, we defined Ethical Moderation and explained why it’s important for all content and digital teams to consider. We learned that by embracing ethical moderation, you commit to:

Protecting your brand reputation

Protecting your online communities and your biggest fans

Safeguarding the psychological wellbeing of those working diligently behind the scenes to build your brand and communities

Recognizing the importance of building a safer, more inclusive and respectful digital world for all

In Part 2, we outline key questions to ask when evaluating your own ethical moderation practices.

14 Questions To Assess If Your Content Moderation Practices Are Ethical

These questions can help you assess your organization’s commitment to ethical moderation and to creating a responsible, compassionate online space for users and moderators alike. They are thought starters, and there are no right answers. Take a few minutes to jot down your answers, and try not to leave any question unanswered, even if your answer is “I don’t know”.

Community Wellbeing

1. How do you handle issues like hate speech and cyberbullying within your online communities?

2. Do you have a clear Community Standards Policy around harassment and bullying? Is it enforced consistently?

Content Team Support

3. Do you actively seek feedback from your content moderation team regarding the challenges they face and the impact on their wellbeing?

4. How do you address moral dilemmas or ethical concerns that moderators might encounter in their roles?

5. How do you actively support your content moderation team day to day?

6. Do you have policies or practices in place to prevent burnout or other negative mental health impacts among your content moderators? If so, what are they? And are they easily accessible for your team?

Automation, AI and 3rd-Party Software

7. Do you outsource content moderation tasks to AI, either through a 3rd-party tool or your own machine learning models?

8. If so, how do you ensure the language models you’re using are unbiased, fair, and representative of your community?

9. If you’re using 3rd-party software or your own models, how does the software protect users from seeing potentially harmful or offensive content, for example by blurring text or issuing warnings?

Outsourcing and Fair Labour

10. Do you outsource content moderation tasks to other humans? If so, how do you ensure fair labour practices and mental health support for your moderators, especially in economically challenged regions?

11. What steps have you taken to minimize your reliance on outsourced human moderation?

Transparency and Guidelines

12. How clear and consistent are your content moderation guidelines and policies?

13. Can you explain your content moderation decision-making process?

14. Do your actions match your guidelines?

Having A Solid Ethical Moderation Practice Is Crucial

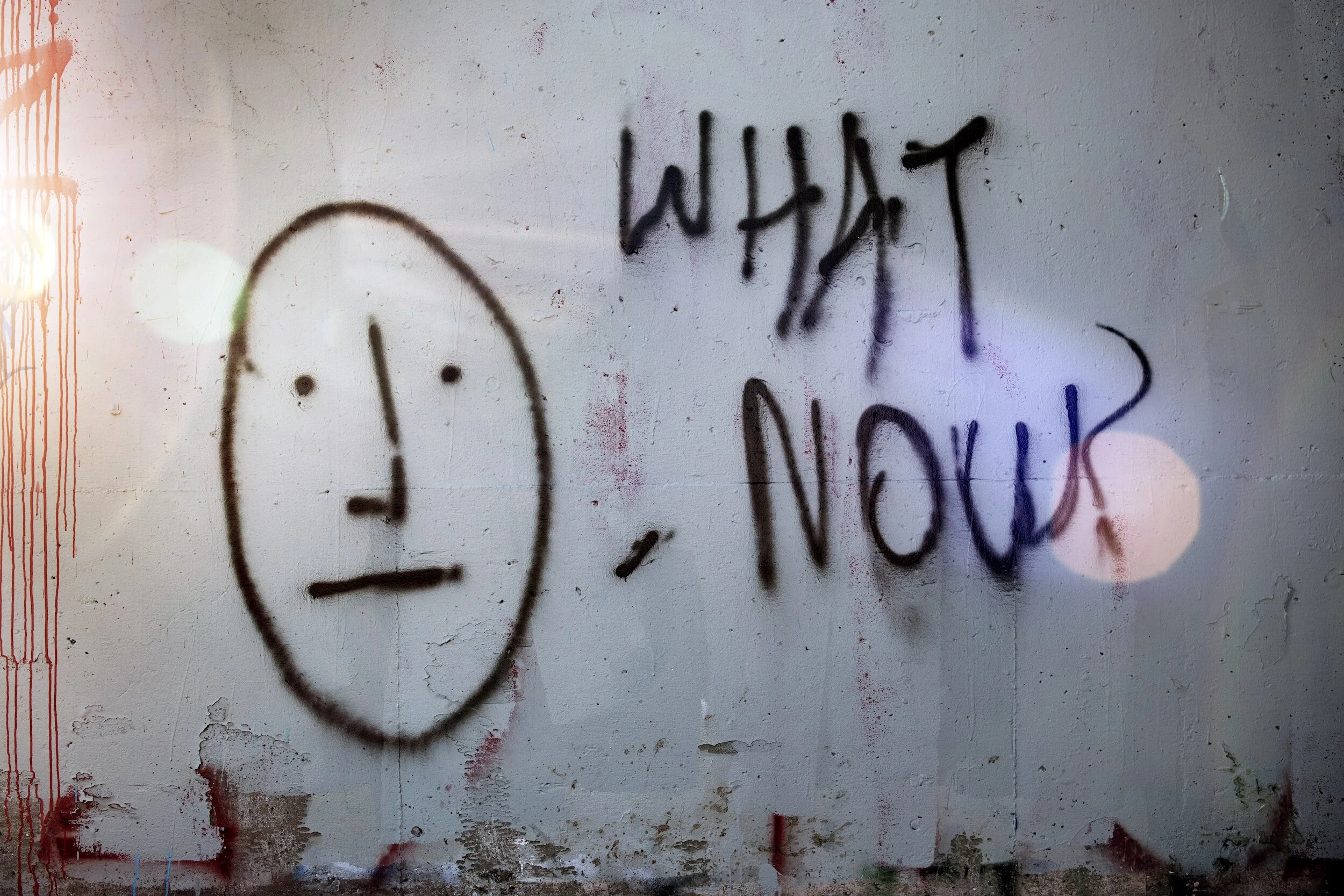

So now what?

Well, while there are no right answers to the questions above, if you were unable to answer more than half of them, it’s time to rethink how you manage your digital communities so you do as little harm as possible to your community, your brand and your team.

Why?

Because solid ethical moderation practices are imperative in the digital age. Ethical moderation goes beyond sifting through content: it’s a balancing act between the wellbeing of a community and robust support for a moderation team. Being ethical extends to how automation, AI, and third-party software are used, which requires fair language models and protection for users against offensive content.

If outsourcing is in the picture, it is vital to ensure fair labour practices and mental health support for overseas teams. And amid all these considerations, transparency is paramount. Clear guidelines and open lines of communication preserve trust between the moderation team, the wider community, and anyone else in between.

So whether you’re a content director for a large brand or running a small online forum, take a beat and ask yourself these questions. Establishing strong, ethical moderation practices helps create a safer, more inclusive online world. It’s not just about upholding standards; it’s about making your digital community a place everyone can enjoy.

Creating a Safe and Inclusive Online Community: Areto Labs' Commitment

At Areto Labs, we believe that our commitment to ethical moderation sets us apart from our competitors. We are proud to provide a safe and respectful online community for all our users, and we believe that this is one of the key reasons why people choose to use our platform. We are committed to creating a community that is inclusive, respectful, and safe for everyone, and we will continue to prioritize ethical principles and values in all our moderation practices.